Google Gemini, Bing Chat, ChatGPT, and Perplexity are excellent LLM-based applications. They can process text, generate images, browse the internet, and execute generated source code. Although their design and source code are proprietary, these applications are not built on a single prompt or a single LLM.

AI Application Complexity

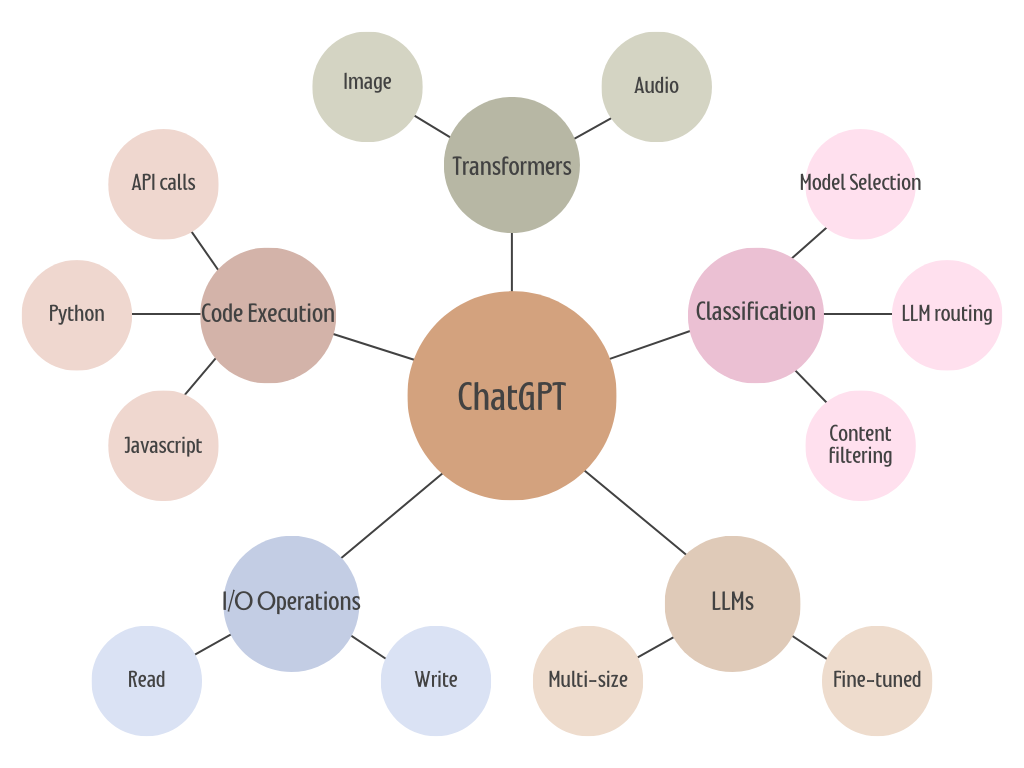

Today's most popular AI-based applications combine a variety of LLMs, non-LLM classification models, transformer models (image, audio, video), code execution libraries, API calls, and system/IO functionality. Sound engineering and product decisions let all of these technologies work together behind the scenes, so the end user experiences them as a single, seamless application.
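To make the idea of hidden orchestration concrete, here is a minimal Python sketch of a single entry point that filters a request, classifies it, and dispatches it to one of several backends. Everything here is hypothetical: `Request`, `blocked_by_filter`, `classify`, and the handler functions are illustrative stubs, and the keyword rules stand in for what would be trained classification models in a real product. It is not how any of the applications named above actually work.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Request:
    """Hypothetical user request; real products carry far more context."""
    text: str
    wants_image: bool = False


def blocked_by_filter(req: Request) -> bool:
    # Hypothetical content filter: a keyword check standing in for a classifier model.
    banned = {"forbidden"}
    return any(word in banned for word in req.text.lower().split())


def classify(req: Request) -> str:
    # Hypothetical router: simple rules standing in for a routing classifier.
    if req.wants_image:
        return "image"
    if "http" in req.text or "search" in req.text.lower():
        return "web"
    return "chat"


# Stubs for separate backends (an LLM, an image model, a search API).
def chat_llm(req: Request) -> str:
    return f"[chat model] reply to: {req.text}"


def image_model(req: Request) -> str:
    return f"[image model] image for: {req.text}"


def web_search(req: Request) -> str:
    return f"[search API] results for: {req.text}"


HANDLERS: Dict[str, Callable[[Request], str]] = {
    "chat": chat_llm,
    "image": image_model,
    "web": web_search,
}


def handle(req: Request) -> str:
    """The only function the front end calls; the pipeline behind it stays hidden."""
    if blocked_by_filter(req):
        return "Sorry, I can't help with that."
    route = classify(req)
    return HANDLERS[route](req)


print(handle(Request("Tell me a joke")))                     # routed to the chat stub
print(handle(Request("a cat in a hat", wants_image=True)))   # routed to the image stub
print(handle(Request("search for LLM benchmarks")))          # routed to the search stub
```

Keeping the handlers behind one dictionary means a backend can be swapped (for example, a smaller or fine-tuned LLM) without changing the entry point the user interacts with.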

These applications typically draw on the following models and libraries:

- Classification
  - multi-model selection (text, image, video, etc.)
  - fine-tuned LLM routing
  - content filtering (explicit content, terms-of-use violations)
- LLMs
  - task-specific fine-tuned models
  - LLMs of various sizes, depending on the use case
- Transformers
  - image description generation
  - image generation
  - audio generation
  - video description generation
  - video generation
- Code/system execution
  - Python code execution (`eval()` or VM-based)
  - API calls
  - file I/O (read/write operations)
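The list contrasts running generated Python with `eval()` versus in a VM. Calling `eval()` inside the application process gives generated code full access to the application's memory and state. A middle ground, sketched below in Python as an assumption rather than how any of these products do it, is to run the code in a separate interpreter process with a timeout. `run_generated_code` is a hypothetical helper; `subprocess.run`, its `timeout` parameter, and the interpreter's `-I` (isolated mode) flag are standard Python.

```python
import subprocess
import sys


def run_generated_code(code: str, timeout_s: float = 5.0) -> dict:
    """Run model-generated Python in a separate interpreter process (hypothetical helper).

    A separate process plus a timeout contains crashes and infinite loops, but this
    is NOT a security sandbox: the child can still read files and use the network.
    Untrusted code belongs in a VM or container.
    """
    try:
        result = subprocess.run(
            # -I: isolated mode, ignores PYTHON* env vars and user site-packages
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,  # subprocess.run kills the child if this expires
        )
    except subprocess.TimeoutExpired:
        return {"ok": False, "stdout": "", "stderr": f"timed out after {timeout_s}s"}
    return {
        "ok": result.returncode == 0,
        "stdout": result.stdout,
        "stderr": result.stderr,
    }


out = run_generated_code("print(sum(range(10)))")
print(out["ok"], out["stdout"].strip())  # True 45
```

A failing or looping snippet comes back as a result with `ok` set to `False` instead of crashing or hanging the host application, which is the property the orchestration layer needs before feeding output back to an LLM.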

There is no one-size-fits-all AI model, and there are no silver bullets. Building products that generate real value is hard; it often requires complex integrations, multiple technologies, and domain expertise.

Our ability to work and iterate quickly with various AI models, LLMs, and prompts will help us move faster, push through the noise, and focus on delivering value to end users.