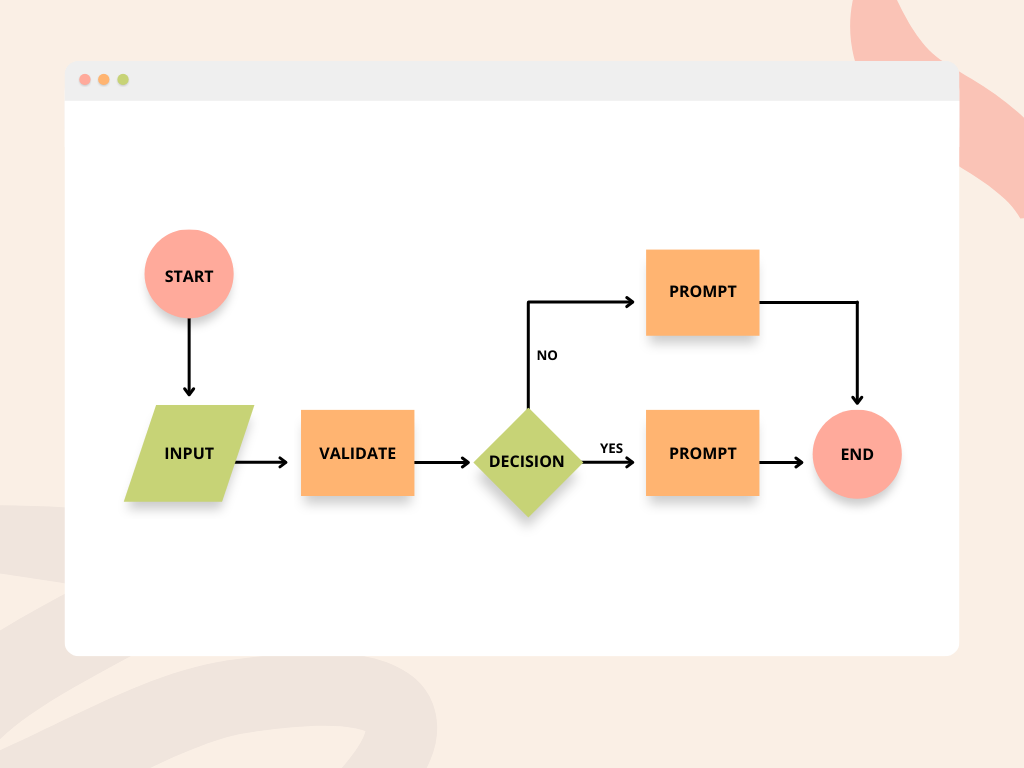

Building prompt-based applications with LLMs will require us to use raw user input and input outside of our control (websites, documents, etc.). It may be beneficial for us to classify these inputs to:

- Classify input for security purposes (input validation)

- Classify input for product business-logic purposes Prompt routing and input validation are essential to building secure, robust AI applications.

Traditional Input Validation

Input and data validation are fundamental security controls that prevent applications from being misused, misconfigured, and exploited. Various data validation libraries exist for languages like Pydantic for Python and Joi for Javascript. However, these libraries are often limited to bespoke RegEx-style validation and filtering.

AI-input Validation

AI-based input validation can use natural language to define how a large variety of input should look like. Three possible methods exist for this input validation:

- Natural Language Inference (NLI)

- Siamese network

- Triplet loss

Natural Language Inference (NLI) classifies a premise-hypothesis pair into three possible classes: entailment, neutral, and contraction. A Siamese network or triplet loss approach, popular in the identity and biometric validation space, may also be used in the input validation space and outputs a float between 0 and 1.

For example, "it is a number" can successfully classify "fifty-five" and "55" as valid numbers that can be used in a prompt. In another more complex example, "it is a complaint" can successfully classify feedback into a separate prompt or function.

Why not use LLMs to validate inputs?

While carefully trained LLMs may work appropriately, the risks of prompt injection may be high. Techniques like delimiting "###" and brute-force prompting through trial and error provide little control over input type and format.